Deep Neural Networks: Complete Comprehensive Guide 2024

Deep Neural Networks (DNNs) have emerged as a groundbreaking technology in artificial intelligence and machine learning. Loosely inspired by the interconnected neurons of the human brain, these networks have driven major advances in areas such as computer vision, natural language processing, and speech recognition.

What are Deep Neural Networks?

Deep Neural Networks, also referred to as deep learning models, are a subset of artificial neural networks distinguished by having multiple hidden layers. These networks learn hierarchically: early layers capture simple features (such as edges in an image), and later layers combine them into increasingly abstract patterns, which lets DNNs extract useful structure from large datasets. Compared with shallow networks, DNNs cope much better with high-dimensional data such as images, audio, and text, which makes them well suited to complex problems across many domains.

How Deep Neural Networks Work

Deep Neural Networks consist of interconnected layers, each made up of multiple artificial neurons, or nodes. These layers fall into three broad types: the input layer, the hidden layers, and the output layer. The input layer receives the raw data, which is then processed by the hidden layers: each neuron computes a weighted sum of its inputs, adds a bias, and passes the result through a nonlinear activation function such as ReLU. Finally, the output layer turns the last hidden representation into predictions or classifications based on the learned patterns.
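To make this flow concrete, here is a minimal pure-Python sketch of a forward pass through a small fully connected network. The layer sizes, the random initialization, and the helper names (`dense_layer`, `forward`, `random_layer`) are illustrative assumptions rather than any library's API; it uses ReLU in the hidden layers and a softmax on the output, which is one common choice for classification.

```python
import math
import random

def relu(z):
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)

def dense_layer(inputs, weights, biases, activation):
    """Fully connected layer: activation(w_j . x + b_j) for every neuron j.

    weights[j] holds neuron j's incoming weights, one per input."""
    return [activation(sum(w * x for w, x in zip(w_row, inputs)) + b)
            for w_row, b in zip(weights, biases)]

def softmax(logits):
    """Convert raw output scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def random_layer(n_in, n_out, rng):
    """Illustrative init: small uniform random weights, zero biases."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out

def forward(x, layers):
    """Input -> hidden layers (ReLU) -> output layer (softmax probabilities)."""
    h = x
    for weights, biases in layers[:-1]:
        h = dense_layer(h, weights, biases, relu)
    out_weights, out_biases = layers[-1]
    logits = dense_layer(h, out_weights, out_biases, lambda z: z)  # no activation before softmax
    return softmax(logits)

rng = random.Random(0)
sizes = [4, 8, 8, 3]  # hypothetical: 4 inputs, two hidden layers of 8, 3 classes
layers = [random_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes, sizes[1:])]
probs = forward([0.2, -1.0, 0.5, 0.9], layers)
print(probs)
```

Frameworks such as PyTorch or TensorFlow express each layer as a matrix multiplication over a batch of inputs, but the per-neuron arithmetic is the same as in this loop.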

Advantages

Deep Neural Networks offer several advantages that make them the preferred choice for many applications. First, they learn hierarchical representations automatically, which greatly reduces the need for manual feature engineering. Second, they make good use of large datasets: as more training data becomes available, their accuracy and generalization typically keep improving.

Deep learning models excel at complex problems such as image recognition, natural language processing, and speech recognition, where they often outperform traditional machine learning algorithms.

Applications of Deep Neural Networks

1. Computer Vision: Deep Neural Networks have revolutionized computer vision tasks such as object detection, image segmentation, and facial recognition. They have enabled breakthroughs in fields like autonomous vehicles, surveillance systems, and medical imaging.

2. Natural Language Processing (NLP): Deep learning models have transformed NLP applications, including sentiment analysis, machine translation, and chatbots. DNNs excel at understanding and generating human-like language, leading to more accurate and contextually relevant results.

3. Healthcare: Deep Neural Networks have made significant contributions to medical research, disease diagnosis, and personalized treatment plans. They have demonstrated exceptional performance in tasks such as cancer detection, drug discovery, and genomics analysis.

4. Finance: Deep learning models are increasingly employed in financial institutions for fraud detection, credit scoring, and algorithmic trading. DNNs can analyze vast amounts of financial data and identify patterns that human experts may overlook, enhancing decision-making processes.

Deep Neural Networks have changed how many problems are approached and have become a core tool across a wide range of industries. By learning directly from data and extracting useful patterns, DNNs continue to drive innovation, which makes deep learning an exciting field to explore and master.

FAQ

What is a Deep Neural Network (DNN)?

A Deep Neural Network (DNN) is a type of artificial neural network (ANN) that consists of multiple layers of interconnected artificial neurons, or nodes. Its design is loosely inspired by the human brain, in particular by the idea that information is processed in successive stages that build up hierarchical representations.

How does a Deep Neural Network differ from a regular (shallow) Neural Network?

The key difference is depth, that is, the number of hidden layers. A regular, shallow neural network typically has only one or two hidden layers, whereas a Deep Neural Network stacks many of them: commonly from three to several hundred, and more than a thousand in some research models. There is no universally agreed cutoff for when a network counts as deep.

What are the advantages of using a Deep Neural Network?

Deep Neural Networks offer several advantages, including:

- Increased learning capacity: The multiple layers allow for the extraction of hierarchical representations, enabling the network to learn complex patterns and relationships.

- Feature extraction: Deep Neural Networks can automatically learn and extract meaningful features from raw input data, reducing the need for manual feature engineering.

- Improved accuracy: The hierarchical representation and increased capacity make DNNs capable of achieving higher accuracy in various tasks, such as image recognition, speech recognition, and natural language processing.

What are some popular architectures used in Deep Neural Networks?

Popular architectures for Deep Neural Networks include:

- Convolutional Neural Networks (CNNs) for image recognition and computer vision tasks.

- Recurrent Neural Networks (RNNs) for sequential data processing, such as natural language processing and speech recognition.

- Transformer networks, which rely on self-attention and power applications such as machine translation and language understanding.
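The operation that gives CNNs their name can be sketched in a few lines. This minimal example assumes a single-channel image, stride 1, and no padding; like most deep learning libraries, it actually computes a cross-correlation (the kernel is not flipped). The hand-written edge-detecting kernel is a hypothetical stand-in for weights that a CNN would learn during training.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation) of a single-channel image."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Slide the kernel over the image and sum the element-wise products.
            s = 0.0
            for i in range(kh):
                for j in range(kw):
                    s += image[r + i][c + j] * kernel[i][j]
            row.append(s)
        out.append(row)
    return out

# A dark-to-bright vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical hand-set vertical-edge detector; a real CNN learns these weights.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The output is large only where the edge sits, which is the sense in which a convolutional filter "detects" a local feature; stacking such layers lets later filters respond to combinations of earlier ones.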

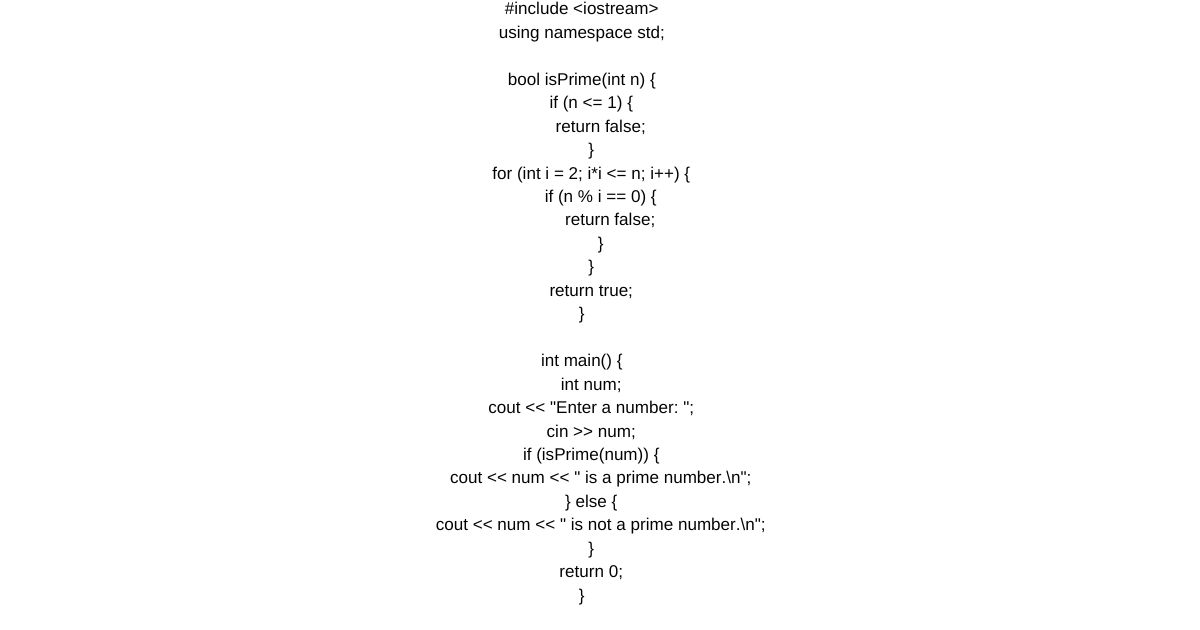

How are Deep Neural Networks trained?

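Deep Neural Networks are typically trained with gradient descent (or a variant such as stochastic gradient descent), using backpropagation to compute the gradients. During supervised training, the network is shown labeled examples, and a loss function measures the error between the predicted output and the true output. Backpropagation applies the chain rule to work out how the loss changes with respect to every weight and bias, and gradient descent then moves each parameter a small step in the direction that reduces the loss. This process repeats over many passes through the training data until the network’s performance, usually checked on held-out validation data, reaches a satisfactory level.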
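The loop below is a minimal sketch of this process for a tiny network (2 inputs, one sigmoid hidden layer, 1 sigmoid output) learning XOR. It assumes binary cross-entropy loss and plain full-batch gradient descent; the hidden size, learning rate, epoch count, and the function name `train_xor` are illustrative choices, not a prescribed recipe.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# XOR truth table: a classic task that a network without hidden layers cannot solve.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def train_xor(hidden=4, lr=0.5, epochs=2000, seed=0):
    """Train with backpropagation + gradient descent; return the loss at every epoch."""
    rng = random.Random(seed)
    W1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]  # input -> hidden
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1, 1) for _ in range(hidden)]                       # hidden -> output
    b2 = 0.0
    n = len(DATA)
    losses = []
    for _ in range(epochs):
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gW2 = [0.0] * hidden
        gb2 = 0.0
        total = 0.0
        for x, y in DATA:
            # Forward pass.
            h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(hidden)]
            p = sigmoid(sum(W2[j] * h[j] for j in range(hidden)) + b2)
            p_safe = min(max(p, 1e-12), 1.0 - 1e-12)  # avoid log(0) in the loss only
            total += -(y * math.log(p_safe) + (1.0 - y) * math.log(1.0 - p_safe))
            # Backward pass (chain rule). With a sigmoid output and cross-entropy,
            # dLoss/d(output pre-activation) simplifies to p - y.
            d_out = p - y
            gb2 += d_out
            for j in range(hidden):
                gW2[j] += d_out * h[j]
                d_hidden = d_out * W2[j] * h[j] * (1.0 - h[j])  # sigmoid'(z) = h(1 - h)
                gb1[j] += d_hidden
                gW1[j][0] += d_hidden * x[0]
                gW1[j][1] += d_hidden * x[1]
        losses.append(total / n)
        # Gradient descent step: move every parameter against its average gradient.
        b2 -= lr * gb2 / n
        for j in range(hidden):
            W2[j] -= lr * gW2[j] / n
            b1[j] -= lr * gb1[j] / n
            W1[j][0] -= lr * gW1[j][0] / n
            W1[j][1] -= lr * gW1[j][1] / n
    return losses

losses = train_xor()
print(f"loss at epoch 0: {losses[0]:.3f}, after training: {losses[-1]:.3f}")
```

All gradients are accumulated before any weight changes, so the backward pass uses the same weights as the forward pass; real frameworks automate this bookkeeping with automatic differentiation.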

What is the problem of vanishing/exploding gradients in Deep Neural Networks?

The vanishing/exploding gradient problem is a phenomenon that can occur during the training of deep neural networks. Backpropagation computes each layer’s gradient by multiplying together one factor for every layer between it and the output, so over many layers the gradients can shrink toward zero (vanishing) or grow very large (exploding). Vanishing gradients leave the early layers learning extremely slowly, while exploding gradients cause large, unstable weight updates; both make deep networks hard to train and can prevent convergence.
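A small worked example shows why depth matters. For a chain of scalar sigmoid units, backpropagation multiplies one factor w·σ′(z) per layer, and σ′(z) = σ(z)(1 − σ(z)) never exceeds 0.25. This sketch assumes every weight is 1 and the input is 0 (illustrative values), so each factor is at most 0.25 and the gradient reaching the input shrinks at least as fast as 0.25^depth.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def input_gradient(depth, weight=1.0, x=0.0):
    """d(output)/d(input) for a chain of `depth` units a = sigmoid(weight * a_prev).

    By the chain rule this is the product, over layers, of weight * sigmoid'(z)."""
    a = x
    grad = 1.0
    for _ in range(depth):
        a = sigmoid(weight * a)
        grad *= weight * a * (1.0 - a)  # sigmoid'(z) = s(1 - s) <= 0.25
    return grad

for depth in (1, 5, 10, 20):
    print(f"depth {depth:2d}: gradient {input_gradient(depth):.2e}")
```

If the per-layer factor were instead greater than 1 (for example 1.5, which can happen with large weights), the same product would grow to 1.5^20 ≈ 3325 after 20 layers: that is the exploding case.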

How can the vanishing/exploding gradient problem be addressed?

To address the vanishing gradient problem, common techniques include weight initialization strategies (e.g., Xavier or He initialization), activation functions that saturate less than sigmoid or tanh (e.g., ReLU and its variants), and normalization techniques (e.g., batch normalization).
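Both initialization schemes scale the random weights according to the layer's fan-in (number of inputs) and, for Xavier, also its fan-out. The sketch below implements the standard formulas, Xavier/Glorot uniform with limit √(6 / (fan_in + fan_out)) and He normal with standard deviation √(2 / fan_in), and compares He initialization with a naive fixed standard deviation of 0.01 (an assumed baseline) in a deep stack of ReLU layers. The width, depth, and helper names are illustrative.

```python
import math
import random

def xavier_uniform(fan_in, fan_out, rng):
    """Glorot/Xavier uniform init: U(-limit, limit), limit = sqrt(6 / (fan_in + fan_out))."""
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return [[rng.uniform(-limit, limit) for _ in range(fan_in)] for _ in range(fan_out)]

def he_normal(fan_in, fan_out, rng):
    """He/Kaiming normal init for ReLU layers: N(0, std^2), std = sqrt(2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]

def naive_normal(fan_in, fan_out, rng):
    """Hypothetical baseline: a fixed small std that ignores the layer size."""
    return [[rng.gauss(0.0, 0.01) for _ in range(fan_in)] for _ in range(fan_out)]

def activation_scale(init, depth=8, width=128, seed=0):
    """Mean squared ReLU activation after `depth` random layers (no biases)."""
    rng = random.Random(seed)
    h = [rng.gauss(0.0, 1.0) for _ in range(width)]
    for _ in range(depth):
        W = init(width, width, rng)
        h = [max(0.0, sum(w * v for w, v in zip(row, h))) for row in W]
    return sum(v * v for v in h) / width

print("He init:   ", activation_scale(he_normal))     # roughly preserves the scale
print("naive init:", activation_scale(naive_normal))  # collapses toward 0
```

With He initialization the signal keeps roughly the same magnitude from layer to layer, so gradients flowing back through those layers do not systematically shrink; with the fixed small scale, the activations (and hence the gradients) fade away with depth.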

To mitigate the exploding gradient problem, gradient clipping is often employed, which limits the magnitude of the gradients during training. This prevents them from becoming too large and destabilizing the learning process.
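One common form, clipping by global norm, can be sketched as follows: if the L2 norm of all gradient values taken together exceeds a threshold, every gradient is scaled down by the same factor, so the update keeps its direction but has a bounded size. The flat list of gradients and the threshold value are illustrative assumptions; frameworks provide built-in versions, such as PyTorch's `torch.nn.utils.clip_grad_norm_`.

```python
import math

def clip_by_global_norm(grads, max_norm):
    """Scale `grads` so their combined L2 norm is at most `max_norm`.

    Returns the (possibly rescaled) gradients and the norm before clipping."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    if total_norm <= max_norm:
        return list(grads), total_norm  # already small enough: leave unchanged
    return [g * max_norm / total_norm for g in grads], total_norm

clipped, norm = clip_by_global_norm([30.0, 40.0], max_norm=5.0)
print(norm, clipped)  # 50.0 [3.0, 4.0]
```

Scaling all gradients by one shared factor, rather than capping each value independently, preserves the relative sizes of the gradient components.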

What is transfer learning in Deep Neural Networks?

Transfer learning is a technique in which a Deep Neural Network pre-trained on a large dataset, often for a different but related task, is used as the starting point for a new task or dataset. By reusing the features and representations the pre-trained network has already learned, transfer learning can improve performance and reduce training time on the new task, especially when the new dataset is small.
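The sketch below illustrates the simplest form of transfer learning, often called feature extraction: the layers taken from the pre-trained network are frozen, and only a new output head is trained on the small target dataset. To stay self-contained, it assumes a hand-set ReLU layer as a stand-in for real pre-trained weights and a logistic-regression head; all names, weights, and data points are hypothetical. Fine-tuning, the other common variant, would also update some or all of the pre-trained weights, usually with a small learning rate.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical stand-in for a layer copied from a pre-trained network (kept frozen).
PRETRAINED_W = [[1.0, -1.0], [-1.0, 1.0], [0.5, 0.5]]
PRETRAINED_B = [0.0, 0.0, 0.0]

def frozen_features(x):
    """ReLU features from the frozen layer; these weights are never updated."""
    return [max(0.0, sum(w * v for w, v in zip(row, x)) + b)
            for row, b in zip(PRETRAINED_W, PRETRAINED_B)]

def train_new_head(data, lr=0.5, epochs=300):
    """Train only a new logistic-regression output layer on top of frozen features."""
    feats = [(frozen_features(x), y) for x, y in data]  # computed once: the base never changes
    n = len(feats)
    w = [0.0] * len(feats[0][0])
    b = 0.0
    losses = []
    for _ in range(epochs):
        gw = [0.0] * len(w)
        gb = 0.0
        total = 0.0
        for f, y in feats:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            p_safe = min(max(p, 1e-12), 1.0 - 1e-12)  # avoid log(0) in the loss only
            total += -(y * math.log(p_safe) + (1.0 - y) * math.log(1.0 - p_safe))
            for k, fk in enumerate(f):
                gw[k] += (p - y) * fk
            gb += p - y
        losses.append(total / n)
        # Gradient descent updates only the head's parameters.
        w = [wi - lr * g / n for wi, g in zip(w, gw)]
        b -= lr * gb / n
    return w, b, losses

# Tiny hypothetical target dataset for the new task.
target_data = [([1.0, 0.0], 1.0), ([0.9, 0.2], 1.0), ([0.0, 1.0], 0.0), ([0.1, 0.8], 0.0)]
head_w, head_b, losses = train_new_head(target_data)
print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Because the frozen features are computed once and only a handful of head parameters are learned, this variant needs little data and little compute, which is why it suits small target datasets.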

What are some challenges associated with Deep Neural Networks?

Some challenges associated with Deep Neural Networks include:

- Large amounts of training data: Deep Neural Networks generally require a significant amount of labeled training data to learn meaningful representations effectively.

- Computational resources: Training deep networks can be computationally expensive and time-consuming, requiring powerful hardware resources, such as GPUs or specialized accelerators.

- Overfitting: Deep networks are prone to overfitting, which occurs when the model performs well on the training data but fails to generalize to new, unseen data. Regularization techniques and proper validation strategies are often employed to mitigate this issue.
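Early stopping is one widely used validation strategy against overfitting: training is halted once the loss on a held-out validation set stops improving, and the weights from the best epoch are kept. The sketch below works on a precomputed list of per-epoch validation losses; the loss values, the `patience` setting, and the function name are made up for illustration.

```python
def early_stopping(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) for a sequence of validation losses.

    Training stops once the loss has failed to improve by more than
    `min_delta` for `patience` consecutive epochs."""
    best_loss = float("inf")
    best_epoch = 0
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses) - 1

# Hypothetical curve: validation loss falls, then rises as the model starts to overfit.
val_losses = [0.90, 0.70, 0.60, 0.58, 0.61, 0.63, 0.66, 0.70]
print(early_stopping(val_losses))  # (3, 6): keep epoch 3's weights, stop at epoch 6
```

In a real training loop the same check runs after each epoch, and a copy of the weights is saved whenever the validation loss reaches a new best.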

How can Deep Neural Networks be interpreted or made more explainable?

Interpreting Deep Neural Networks and making them more explainable is an active area of research. Some approaches include:

- Feature visualization: Techniques that aim to visualize and understand the learned representations in the intermediate layers of a deep network.

- Attention mechanisms: These mechanisms help identify the important parts of the input that the network focuses on when making predictions.

- Layer-wise relevance propagation: This method propagates a prediction backward through the network to assign a relevance score to each input feature, highlighting its importance in the network’s decision-making process.

- Rule extraction: Methods that attempt to extract human-interpretable rules or decision trees from trained deep networks.
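As a rough illustration of the attention-based approach, the sketch below computes scaled dot-product attention weights, softmax(q · k_i / √d), for one query over a short token sequence and lists the tokens by weight. The tokens and the 2-dimensional query and key vectors are hypothetical; in a trained Transformer they come from learned projections, and attention weights are best read as a heuristic signal rather than a complete explanation of a prediction.

```python
import math

def attention_weights(query, keys):
    """Scaled dot-product attention weights: softmax(q . k_i / sqrt(d)) over positions i."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

tokens = ["the", "movie", "was", "great"]
# Hypothetical 2-d key vectors and query; a real model learns these projections.
keys = [[0.1, 0.0], [0.5, 0.2], [0.0, 0.1], [2.0, 1.5]]
query = [1.0, 1.0]
weights = attention_weights(query, keys)
for tok, w in sorted(zip(tokens, weights), key=lambda pair: -pair[1]):
    print(f"{tok:>6}: {w:.3f}")
```

Here the query attends mostly to "great", which is the kind of pattern practitioners inspect when they use attention maps to see what a model focused on.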

